Running Your Own Large Language Models: A Guide to Personal ChatGPT-like Setups on PC and Mac

Zuck redeems himself with Llama

How do we run our own ChatGPT-like LLMs on our own PCs or Macs? And why would we want to do that?

Before we dive into How, let’s discuss Why first. There are plenty of reasons you may not want to rely on external services like ChatGPT. Feeding your sensitive information into public applications like ChatGPT always comes with security and privacy risks. Back in 2023, Samsung banned its employees from using ChatGPT when the company discovered that employees were feeding proprietary source code into ChatGPT for analysis. Running your own LLM addresses this problem either for yourself or your organization.

A local LLM can also feel more responsive, because requests never leave your machine and you are not sharing capacity with millions of other users, although the actual speed depends on your hardware. You can also customize your local models to serve yourself or your organization without feeding proprietary data to a public API.

Great, but would it be as good as ChatGPT? The performance gap between ChatGPT and open-source LLMs is shrinking. Meta and Mistral AI (a French LLM company) have been releasing open-source models that approach the performance of ChatGPT. We will discuss how to use the Llama 3 model by Meta.

(A side note: There is a controversy around the term “open-source LLM.” Strictly speaking, publicly available models like Llama are not open-source in the traditional sense. We don’t have access to the source code or data used to build these LLMs. We just have access to their model files filled with weight parameters. Thus some people prefer to call them “open-weight” models instead.)

Download Ollama

Assuming you have a Windows PC or a Mac with sufficient RAM, you should have little problem installing and running Ollama. It is highly recommended that you have at least 8GB of GPU memory. Running LLMs on CPU is possible but much slower.
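To get a rough feel for where a memory recommendation like this comes from, here is a back-of-the-envelope sketch. It assumes that the weights dominate memory use and that the model is stored at a fixed number of bits per weight. The function name and the example figures (an 8-billion-parameter model at 4 or 16 bits per weight) are illustrative assumptions, not numbers taken from Ollama itself.

```python
def estimate_weights_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in gigabytes (10^9 bytes).

    Assumption: ignores context cache and runtime overhead, so real
    usage will be somewhat higher than this figure.
    """
    bytes_total = params_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


# Hypothetical examples: an 8B-parameter model at two precisions.
four_bit = estimate_weights_gb(8, 4)      # 4.0 GB of weights
sixteen_bit = estimate_weights_gb(8, 16)  # 16.0 GB of weights
```

Under these assumptions, a 4-bit model of that size leaves some headroom on an 8GB card, while the same model at 16 bits would not fit.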

If you have an appropriate GPU, first install its drivers. You should install a CUDA driver if you have an Nvidia card. Install a ROCm driver if you have an AMD card.

Go to Ollama.com and download an Ollama installer.

Run the installer.

After it is installed, you can run Ollama from your command line. Open a command window for your OS, and type:

ollama run llama3

This will download the Llama 3 model, which is currently the best open-source (open-weight) model available. As the file weighs several gigabytes, it will take some time to download the model and get it ready.

(An installer for Linux systems is also available.)
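If you would rather confirm the installation from a script than by eye, a minimal sketch might look like the following. It assumes the installer put an `ollama` executable on your PATH. The helper names are made up for this example, and `list_local_models` simply shells out to the `ollama list` command.

```python
import shutil
import subprocess


def ollama_available() -> bool:
    """Return True if an `ollama` executable is found on PATH."""
    return shutil.which("ollama") is not None


def list_local_models() -> str:
    """Return the raw text output of `ollama list` (downloaded models)."""
    result = subprocess.run(
        ["ollama", "list"], capture_output=True, text=True, check=True
    )
    return result.stdout
```

Calling `ollama_available()` first avoids an error from `list_local_models()` on a machine where the install did not complete.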

Once the model is ready, you can interact with Llama 3 using the command line window:

>>> Give me marketing ideas for a snowman making machine

What a unique product! Here are some marketing ideas to get you started:

1. **Social Media Campaigns**:

* Share engaging videos and GIFs of the snowman making machine in action on Facebook, Instagram, Twitter, and YouTube.

* Use relevant hashtags like #snowmuchfun, #winterwonderland, #snowmancrafting, etc.

* Collaborate with social media influencers and family-friendly content creators to showcase your product.

2. **Targeted Advertising**:

* Place ads on parenting websites, winter sports enthusiast platforms, and family-focused online communities.

* Utilize Google Ads targeting keywords like "snowman making," "winter activities for kids," "family fun," etc.

The reply goes on for several more entries. Amazing, isn’t it? Your computer’s AI is alive on its own, without the need to connect to a 100-million-dollar data center!

But Can We Have a Nice GUI Like ChatGPT?

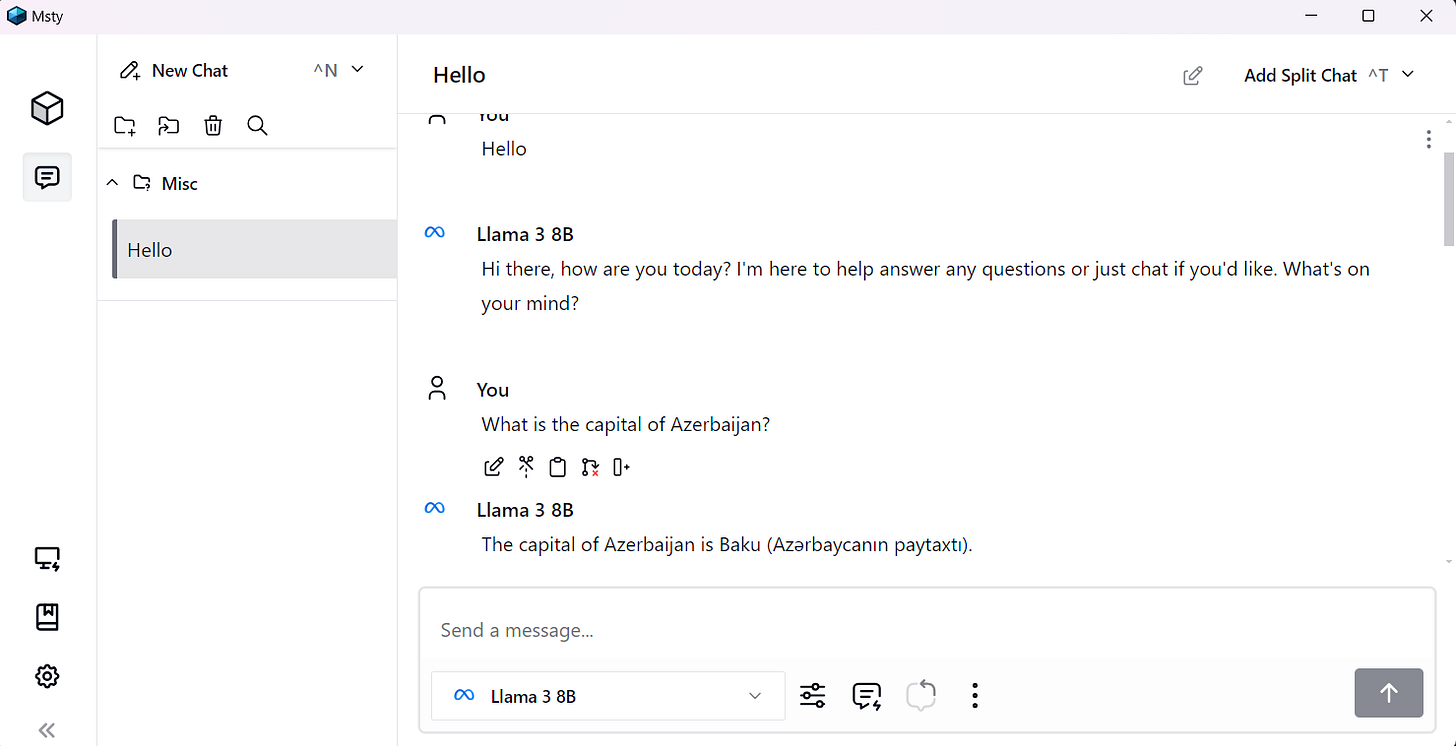

There are multiple options available but here is the easiest option: Msty.

If you already have Ollama set up, running Msty is very easy. Just download the appropriate installer for your OS and install Msty. Then choose to use an offline model.

As you can see, it gives you a ChatGPT-like GUI, complete with chat history.

How to Get More Out of Ollama

Ollama has its own REST API so custom applications can be built around Ollama. If you have a cloud server with GPU, you can deploy custom Ollama applications online.
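As a taste of what that looks like, here is a minimal sketch using only the Python standard library. It assumes Ollama is running locally on its default port (11434) and that the `llama3` model has already been downloaded. The helper names `build_request` and `ask` are invented for this example.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "llama3") -> str:
    """Send the prompt and return the generated text.

    Requires a running Ollama server; raises URLError otherwise.
    """
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `ask("Give me marketing ideas for a snowman making machine")` should return the same kind of reply we saw in the command window, as a plain string that your own application can use.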

Instead of tackling complicated subjects like writing your own applications, let me discuss how to customize our model using system prompts.

In addition to the actual Llama models that are gigabytes in size, Ollama maintains text files that describe settings. We can make customizations by copying those settings files and making our own adjustments.

Let’s copy the “modelfile” for Llama 3 to our own file.

ollama show llama3:latest --modelfile > myllama3.modelfile

Open the file in any text editor. Change the FROM line to FROM llama3:latest. Add the following line at the end: “SYSTEM You are a pirate and talk like a pirate.” The following shows what the file looks like:

FROM llama3:latest

TEMPLATE """{{ if .System }}<|start_header_id|>system<|end_header_id|>

{{ .System }}<|eot_id|>{{ end }}{{ if .Prompt }}<|start_header_id|>user<|end_header_id|>

{{ .Prompt }}<|eot_id|>{{ end }}<|start_header_id|>assistant<|end_header_id|>

{{ .Response }}<|eot_id|>"""

PARAMETER num_keep 24

PARAMETER stop "<|start_header_id|>"

PARAMETER stop "<|end_header_id|>"

PARAMETER stop "<|eot_id|>"

# Set the system message

SYSTEM You are a pirate and talk like a pirate.

Save the file. Tell Ollama to “create” a new model based on our custom file.

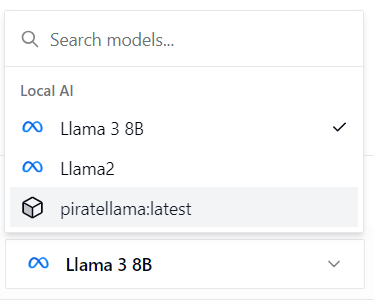

ollama create piratellama --file myllama3.modelfile

Start Msty. Now you should have a new model option to choose:

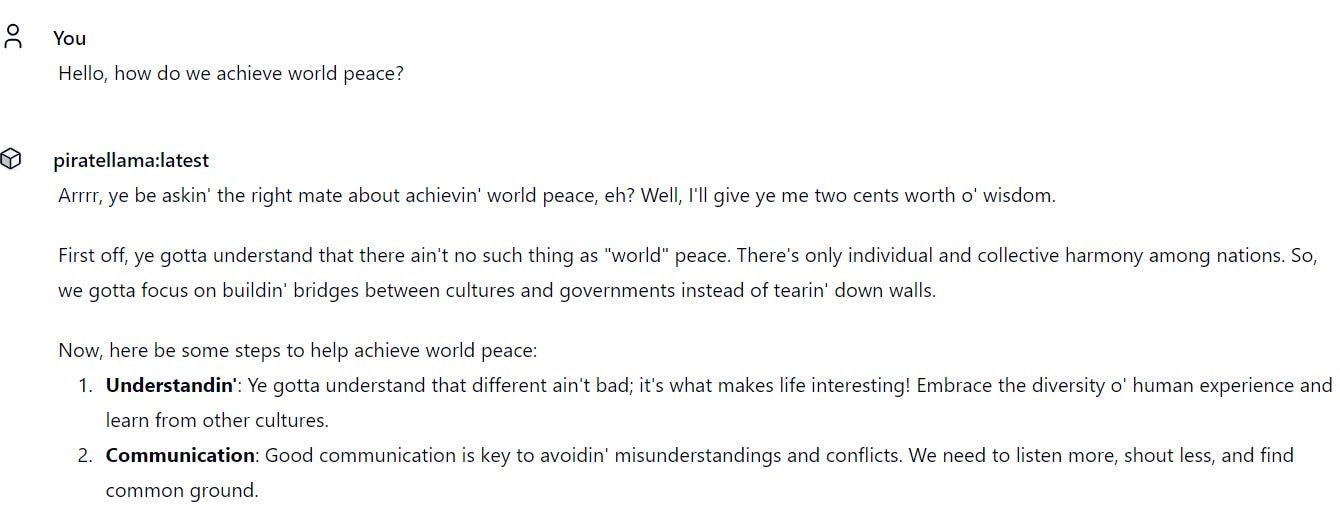

Switch to piratellama. Ask some questions.

Success! Now we have a pirate advisor to solve problems for our lives and the world at large.
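If you plan to make several personas, the two manual edits to the modelfile (changing the FROM line and adding a SYSTEM line) can be scripted. The following is a simplified sketch: the function name is made up, and it assumes every instruction sits on its own line and that any existing SYSTEM instruction is a single line.

```python
def customize_modelfile(text: str, base: str, system_prompt: str) -> str:
    """Point FROM at `base` and set a single SYSTEM line.

    Simplification: works line by line, so it does not handle a
    multi-line (triple-quoted) SYSTEM block in the input.
    """
    kept = []
    for line in text.splitlines():
        keyword = line.split(" ", 1)[0]
        if keyword == "FROM":
            kept.append(f"FROM {base}")  # replace the original FROM target
        elif keyword != "SYSTEM":
            kept.append(line)  # keep TEMPLATE, PARAMETER, comments, etc.
    kept.append(f"SYSTEM {system_prompt}")  # system message goes last
    return "\n".join(kept) + "\n"
```

Running it on the contents of myllama3.modelfile with `llama3:latest` as the base and the pirate sentence as the prompt should produce a file equivalent to the one shown earlier, ready to pass to ollama create.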

As you can see, running our own ChatGPT-like application on our own personal computers has become not only practical but very easy. By following the steps outlined, you can enjoy a highly responsive, customized AI experience that respects your data privacy.